Big O Notation

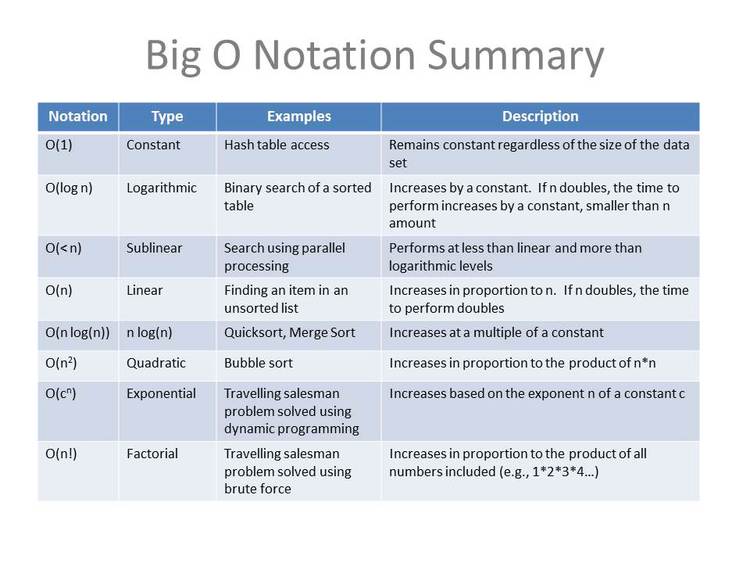

Big O notation is a way to characterize the time or resources needed to solve a computing problem, including those encountered in Machine Learning and AI. It's particularly useful in comparing various computing algorithms under consideration. Below is a table summarizing Big O functions. The four most commonly referenced and important to remember are:

- O(1) Constant access time such as the use of a hash table.

- O(log n) Logarithmic access time such as a binary search of a sorted table.

- O(n) Linear access time such as the search of an unsorted list.

- O(n log(n)) Multiple of log(n) access time such as using Quicksort or Mergesort.